Mingde Zhao, Zhen Liu, Sitao Luan, Shuyuan Zhang, Doina Precup, Yoshua Bengio

[NeurIPS 2021] This paper received media coverage from Synced Review, The Mila Blog (available in English & French), MarkTechPost, etc.

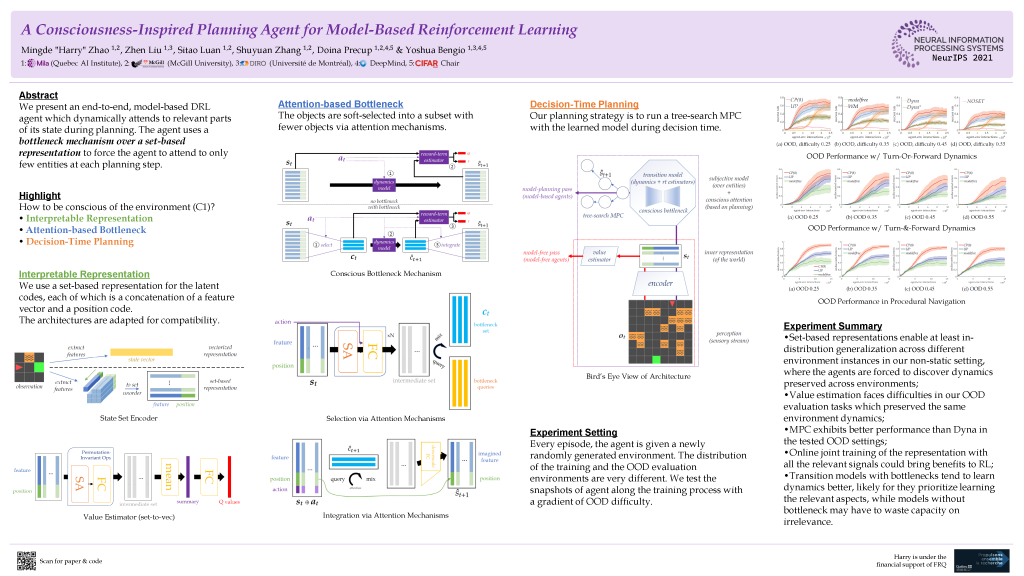

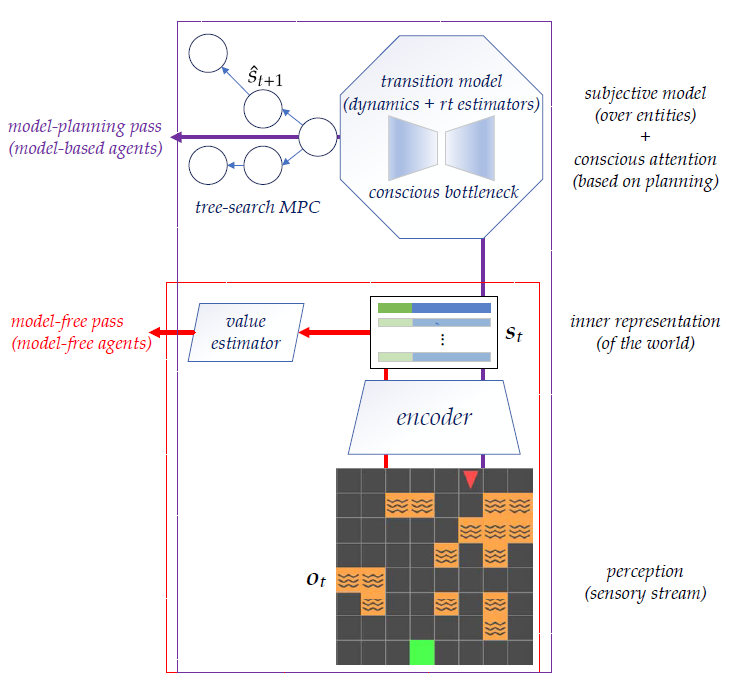

We introduce into reinforcement learning inductive biases inspired by higher-order cognitive functions in humans. These architectural constraints enable planning to direct attention dynamically to the interesting parts of the state at each step of imagined future trajectories.

Whether planning a route home from the office or from a hotel to an airport in an unfamiliar city, we typically focus on a small subset of relevant variables, e.g. the change in position or the presence of traffic. An interesting hypothesis of how this path-planning skill generalizes across scenarios is that it may be due to computation associated with the conscious processing of information. Conscious attention focuses on a few necessary environment elements, with the help of an internal abstract representation of the world. This pattern, also known as consciousness in the first sense (C1), has been theorized to enable humans’ exceptional adaptability and learning efficiency. A central characterization of conscious processing is that it involves a bottleneck, which forces one to handle dependencies between very few characteristics of the environment at a time. Though this focus on a small subset of the available information may seem limiting, it may facilitate Out-Of-Distribution (OOD) and systematic generalization to other situations where the ignored variables are different and yet still irrelevant. In this paper, we propose an architecture which allows us to encode some of these ideas into reinforcement learning agents.

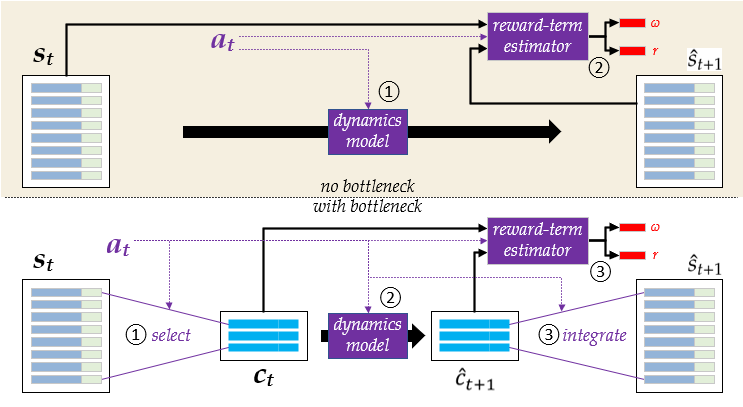

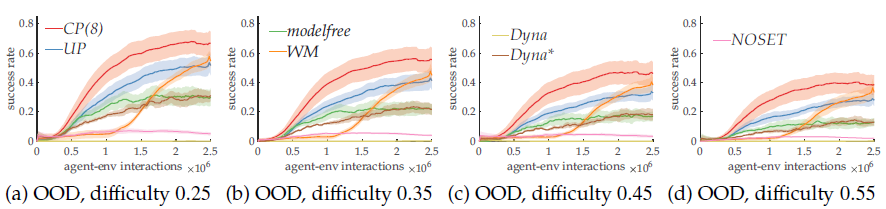

Our proposal is to take inspiration from human consciousness to build an architecture which learns a useful state space and in which attention can be focused on a small set of variables at any time. This idea of “partial planning” is enabled by modern deep RL techniques. Specifically, we propose an end-to-end latent-space model-based RL (MBRL) agent which, unlike most existing works, does not require reconstructing the observations, and which uses Model Predictive Control (MPC) for decision-time planning. From an observation, the agent encodes a set of objects as a state, and a selective attention bottleneck mechanism lets it plan over selected subsets of that state (Sec. 4). Our experiments show that the inductive biases improve a specific form of OOD generalization, where consistent dynamics are preserved across seemingly different environment settings (Sec. 5).
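To make the bottleneck idea concrete, here is a minimal sketch, not the paper's implementation. It assumes the state is a set of object vectors, that relevance is scored by a plain dot product against a query vector, and that a hard top-k selection picks the subset to plan over. The function name `select_bottleneck` and the toy vectors are hypothetical.

```python
def select_bottleneck(objects, query, k):
    """Return the indices of the k objects most relevant to the query.

    Assumption: relevance is a dot product between each object vector and
    the query. The actual attention mechanism in the paper may differ.
    """
    scores = [sum(o * q for o, q in zip(obj, query)) for obj in objects]
    # Rank object indices from highest to lowest score.
    ranked = sorted(range(len(objects)), key=lambda i: scores[i], reverse=True)
    # Keep only the top-k indices, returned in their original order.
    return sorted(ranked[:k])


# Toy state: four objects with two features each (hypothetical values).
objects = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
query = [1.0, 0.0]

selected = select_bottleneck(objects, query, k=2)
# The planner would then simulate dynamics only over objects[i] for i in selected.
```

The point of the hard selection is that the model's predictions depend only on the chosen subset, so changes in the unselected objects cannot affect the plan.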

Interesting Q & As:

Q: Can you define what you mean by OOD in this work?

A: We focus on skills transferable to totally different environments with consistent dynamics. This means the environmental dynamics that are sufficient for solving both the in-distribution training tasks and the OOD evaluation tasks are consistently preserved, while everything else can be very different. Intuitively, we want to train our agent to plan routes in its home city and expect this ability to generalize to a totally different municipality. The places can be very different, but the route-planning skill depends on knowledge that is quite universal.

Q: Why do you employ a non-static setting where environments change every episode?

A: Intuitively, the agent has no need to understand the dynamics of the task if the environment is fixed. Learning to memorize where to go and where not to go is far easier than reasoning about what may happen. When the environment changes every episode, it becomes necessary for the agent to actually learn and understand the environment dynamics, which is crucial for OOD evaluation.
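As a rough illustration of such a non-static setting, the sketch below resamples a toy grid layout at the start of every episode. This is a hypothetical stand-in, not the environment used in the paper: the grid size, obstacle count, and the `sample_layout` helper are all assumptions made for the example.

```python
import random


def sample_layout(size, n_obstacles, rng):
    """Sample a fresh layout: distinct start, goal, and obstacle cells."""
    cells = [(r, c) for r in range(size) for c in range(size)]
    # Sampling without replacement guarantees that no two roles share a cell.
    start, goal, *obstacles = rng.sample(cells, n_obstacles + 2)
    return {"start": start, "goal": goal, "obstacles": set(obstacles)}


rng = random.Random(0)  # seeded only so the example is reproducible
# One new layout per episode: memorizing positions from earlier episodes
# does not help, while the movement rules stay the same throughout.
episodes = [sample_layout(8, 10, rng) for _ in range(3)]
```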

Q: How do you avoid collapse in the state representation?

A: Apart from the training signal for dynamics, which could cause collapse if used alone, all other training signals pass through the common bottleneck of the encoder. The representation is therefore also shaped by the TD signals and the reward-termination prediction signals. The regularizations contribute to this as well.

Q: Why not use richer architectures for experiments?

A: The proposed agent already has many components. To isolate the factors that could influence the agent's behavior, we use the smallest architecture sizes that still enable good RL performance on our test settings.

Q: Why not use MCTS as the search method for decision-time planning?

A: Unlike AlphaGo, which employs MCTS, our architecture is built on the simplest baseline, DQN. DQN uses a greedy policy derived from a value estimator, rather than a parameterized policy as in the actor-critic architecture used in AlphaGo. Because the DQN policy is deterministic with respect to the estimated values, planning cannot take sufficient advantage of sample-based MCTS.
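The determinism argument can be seen in a few lines. The sketch below assumes a plain argmax over a fixed list of estimated action values (the numbers are made up). Querying the greedy policy repeatedly in the same state always returns the same action, so repeated sampling adds no diversity to a search.

```python
def greedy_action(q_values):
    """Return the index of the action with the highest estimated value."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


# Hypothetical value estimates for three actions in a single state.
q_values = [0.1, 0.7, 0.2]

# Query the policy 100 times and collect the distinct actions it returns.
distinct_actions = {greedy_action(q_values) for _ in range(100)}
```

Here `distinct_actions` contains a single action, whereas a stochastic parameterized policy would typically spread its samples over several actions.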